I decided that one of the features of one of my plugins really ought to be its own plugin, so I extracted it into this package: HexagonSoftware.SpecFlowPlugins.FilterCategoriesDuringGeneration.

The new plugin is a lot more powerful than the old tag-stripping feature and works in a much more meaningful way.

The old feature literally stripped tags out of a scenario or feature. That means assumptions made about tags present in generated tests could be violated. If you wrote a binding that was coupled to the presence of a tag and then stripped that tag out (because you use it as a control tag), the binding would cease to be found.

The new plugin doesn't have that problem. It works by decoupling test-category generation from the tags in a scenario or feature file.

It has two opposing features: category-suppression and category-injection.

Suppression (right now) works by applying a set of regular expressions to each tag. If any of these regular expressions matches, category-generation is suppressed for that tag.
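To make the matching rule concrete, here is a minimal sketch in Python (not the plugin's actual code). It assumes tags are compared without their leading "@" and that a pattern counts as a match if it is found anywhere in the tag, which is why the patterns are anchored with "^". The function names and the sample tags are hypothetical.

```python
import re

def is_suppressed(tag, patterns):
    # A tag is suppressed as soon as any one pattern matches it.
    return any(re.search(pattern, tag) for pattern in patterns)

def unsuppressed(tags, patterns):
    # Only tags that no pattern matches go on to become categories.
    return [tag for tag in tags if not is_suppressed(tag, patterns)]

# Hypothetical tags; "^Dialect:" selects every tag with that prefix.
print(unsuppressed(["Dialect:Combat", "Slow"], ["^Dialect:"]))
```

Note that the tag itself is left on the scenario in this model; only its category is dropped, so bindings that look for the tag can still find it.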

Injection does the opposite: it adds one or more categories to every test generated for a particular project.

The exact details can be viewed in the readme.txt that pops up when you install the package, but I'll give a brief example here. As with all my SpecFlow plugins, it is controlled by a .json file.

Here is an example from one of my test assemblies:

    {
      "injected-categories": [ "Unit Tests" ],
      "suppress-categories": {
        "regex-patterns": [ "^BindAs:", "^Dialect:", "^Unbind:" ]
      }
    }

The regex patterns under suppress-categories each select a different prefix. If that's not obvious to you, here's my favorite source for exploring the regular expression syntax. Anyway, this allows me to simply and concisely select whole families of tags for which category-generation should be suppressed.

The injected categories are added verbatim as categories on every generated test.
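As a rough model of how the two settings combine, the following Python sketch reads the configuration shown above and computes the categories one generated test would carry. It is an illustration under assumptions, not the plugin's implementation: the function name, the sample tags "Dialect:Combat" and "BindAs:Hero", and the ordering of the resulting list are all made up for the example.

```python
import json
import re

# The same configuration as the example from my test assembly.
CONFIG_TEXT = """
{
  "injected-categories": [ "Unit Tests" ],
  "suppress-categories": {
    "regex-patterns": [ "^BindAs:", "^Dialect:", "^Unbind:" ]
  }
}
"""

def generated_categories(tags, config):
    # Hypothetical helper: injected categories first, then every tag
    # that none of the suppression patterns matches.
    patterns = config.get("suppress-categories", {}).get("regex-patterns", [])
    kept = [t for t in tags if not any(re.search(p, t) for p in patterns)]
    return list(config.get("injected-categories", [])) + kept

config = json.loads(CONFIG_TEXT)

# Hypothetical scenario tags: two control tags and one ordinary tag.
result = generated_categories(["Dialect:Combat", "BindAs:Hero", "Slow"], config)
print(result)  # ['Unit Tests', 'Slow']
```

The control tags disappear from the category list, the ordinary tag survives, and the injected category is always present.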

What this does, when paired with the other plugins that allow for (tag-based) selective sharing of scenarios among multiple test assemblies, is allow me to "rewrite" the categories for a generated assembly.

Even though I use tags to select certain dialects into particular scenarios and to control which scenarios manifest in which gate, my unit test list stays clean when it's grouped by category.

Figure: A clean categories list despite my use of dozens of tags for various control purposes

As you can see above (and, in more detail, below), un-rewritten tags still show up as categories. So a single test might end up in two categories but only when that's what I really want.

Figure: WillTakeDeathStepQuickly and WillTakeKillShotQuickly both show up in Unit Tests and Slow

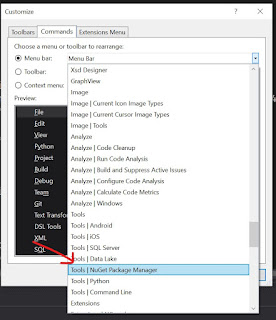

As with all things on NuGet.org, this plugin is free. So, if you think it can help you, you don't have much to lose by downloading it and giving it a try.